DIVINE GENESIS

Exploring Creation through Astronomy and Biology

Introduction

Scientists who advocate for the theory of evolution often regard creationism as lacking empirical support and scientific rigor. They contend that creationism should not be included in science curricula, as it fails to offer a scientifically substantiated explanation for the diversity and complexity of life on Earth.

On the other hand, evolutionary theory contains gaps and unanswered questions, particularly regarding the origin of life and the complexity of biological systems. Natural selection and mutations are insufficient to explain the intricate structures and functions observed in living organisms. Furthermore, evolutionary theory applies only to existing living organisms and does not address the origin of life. Additionally, it relies heavily on assumptions and speculative reconstructions, thereby challenging its validity as a comprehensive explanation for the diversity of life.

This book is written to explore the debate between creation and evolution by discussing the creation of the universe, the uniqueness of the Earth, and the origin of life.

In the first part, we will introduce the hierarchical structure of the universe and discuss the creation of the universe as revealed by astronomical observations. Then, we will examine whether the creation of the universe described in the Bible aligns with astronomical findings and whether the Earth is 6,000 years old, and we will take a closer look at the fine-tuned nature of the universe.

The second part presents ten amazing facts about the Earth, emphasizing its unique suitability for supporting life and pointing to evidence of purposeful design.

In the third part, the origin of life is explored, challenging conventional evolutionary theories and highlighting the complexity of biological systems as evidence for divine creation. The adequacy of the term "Darwin's theory of evolution" is examined, followed by an investigation into whether humans evolved from apes. Additionally, the concept of intelligent design is introduced, and creationism is explored through discussions on particle physics, the existence of extraterrestrial life, the instincts of animals, and the mathematics found in nature.

The book concludes with a heartfelt invitation to faith, encouraging readers to reflect on their spiritual journey and consider the transformative power of belief. It introduces the gospel and provides practical guidance on how to embrace faith, including steps to understand and receive eternal life, offering hope and assurance for those seeking a deeper connection with God.

I hope this book provides renewed knowledge of creation, deepening your understanding of the intricate design and purpose woven into the universe, and offers an opportunity to meditate on the boundless grace, wisdom, and power of God, the divine Creator, who sustains all things and invites us to marvel at His handiwork.

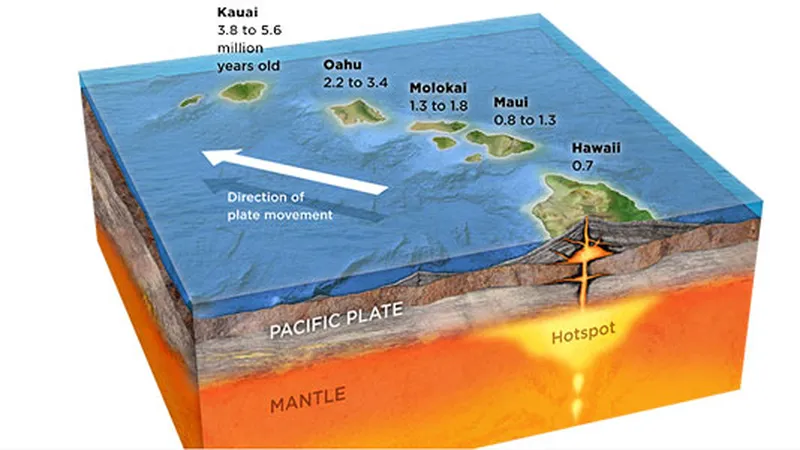

Content 1. The Creation of the Universe

As a child, you may recall nights spent camping in the countryside or high in the mountains, gazing at countless stars shimmering in the vast expanse above, or marveling at shooting stars streaking gracefully across the dark sky. Such experiences often fill us with awe, wonder, and a profound appreciation for the immense beauty and scale of the universe. In those moments, you might have felt a deep connection to the cosmos, accompanied by a sense of humility about your place within it. Questions may have stirred in your mind: How many stars fill the sky? Could there be life beyond our world? How did the universe begin, and how might it end? Who created it all? The breathtaking beauty and enigmatic nature of the night sky spark curiosity, inviting reflection on the origins of the universe and our purpose within it. These moments of fascination leave an enduring imprint, inspiring us to seek answers to life’s greatest mysteries.

In this chapter, we will explore the origin of the universe from both astronomical and Biblical perspectives. We will provide scientific support for the creation record in Genesis by comparing these two viewpoints. Additionally, we will examine which was created first, the Earth or the Sun, whether the Earth is 6,000 years old, and the concept of a fine-tuned universe.

a. The Hierarchical Structure of the Universe

To discuss the origin of the universe, let's first explore its hierarchical structure. We will start with our solar system and move on to our Galaxy, external galaxies, clusters of galaxies, superclusters, and supercluster complexes.

i. The Solar System

The solar system consists of a star called the Sun, eight planets orbiting it, the asteroid belt between Mars and Jupiter, the Kuiper Belt, and its outermost member, the Oort Cloud. A star is a self-luminous celestial body powered by nuclear fusion, while a planet is a celestial body that orbits a star and shines by reflecting the star's light.
Earth is the third planet from the Sun. The distance from Earth to the Moon is 384,000 km, which would take 16 days to cover by airplane at 1,000 km/h. The distance from Earth to the Sun is about 150 million kilometers, or one astronomical unit (AU), which would take 17 years by airplane. Neptune lies 30 AU from the Sun, the Kuiper Belt extends from 30 to 50 AU, and the Oort Cloud extends from 2,000 to 200,000 AU. At the speed of light, it would take 8.3 minutes to travel from Earth to the Sun, about 4 hours to reach Neptune, about 11.5 days to reach the inner edge of the Oort Cloud, and 9.5 months to cover 0.79 light-years (about 50,000 AU), well into the Oort Cloud. By airplane, that last journey would take about 850,000 years.

Fig. 1.1. Solar system including the Kuiper Belt and Oort Cloud

Comets can be classified as short-period or long-period comets. The Kuiper Belt is the source of short-period comets, and the Oort Cloud is the source of long-period comets. Because of these distant origins, comets travel in highly elongated orbits with large eccentricities. The Sun's diameter is 109 times Earth's, its mass is 333,000 times Earth's, and it rotates about once every 25 days at its equator.

ii. The Stellar System

Upon leaving the Oort Cloud, you enter the realm of the stars. The closest star to Earth is Proxima Centauri, which is 14% the size of the Sun, has 12% of its mass, and lies about 4.2 light-years away. Traveling there by airplane would take approximately 4.6 million years.

If you closely observe the twinkling stars in the night sky, you'll notice that they have various colors. A star's color depends on its surface temperature: cooler stars appear reddish, while hotter stars appear white or bluish white. For example, Betelgeuse (α Ori) is red, the Sun is yellow, and Sirius (α CMa), the brightest star in the night sky, is bluish white.

Fig. 1.2. Stars exhibit a variety of colors

A star’s mass determines its nuclear fusion rate, which in turn governs its luminosity and lifespan. More massive stars consume their fuel faster than less massive stars and therefore live shorter lives. Stars end their lives as white dwarfs, neutron stars, or black holes.
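The travel times quoted for the solar system and for Proxima Centauri come from dividing each distance by the speed of an airliner (the 1,000 km/h used in the text) or by the speed of light. A minimal Python sketch of that arithmetic follows; the conversion constants are standard rounded values, and the function names are hypothetical, chosen only for illustration.

```python
# Travel-time arithmetic for the distances quoted in this section (illustrative sketch).
KM_PER_AU = 1.496e8           # 1 astronomical unit, about 150 million km
KM_PER_LIGHT_YEAR = 9.461e12  # 1 light-year in km
LIGHT_SPEED_KM_S = 299_792.458
PLANE_SPEED_KM_H = 1_000.0    # airliner speed assumed in the text
HOURS_PER_YEAR = 24 * 365.25


def years_by_plane(distance_km: float) -> float:
    """Years needed to cover distance_km at a constant 1,000 km/h."""
    return distance_km / PLANE_SPEED_KM_H / HOURS_PER_YEAR


def days_at_light_speed(distance_km: float) -> float:
    """Days that light needs to cover distance_km."""
    return distance_km / LIGHT_SPEED_KM_S / 86_400


DISTANCES_KM = {
    "Moon": 384_000,
    "Sun (1 AU)": KM_PER_AU,
    "Neptune (30 AU)": 30 * KM_PER_AU,
    "Oort Cloud inner edge (2,000 AU)": 2_000 * KM_PER_AU,
    "0.79 light-years": 0.79 * KM_PER_LIGHT_YEAR,
    "Proxima Centauri (4.2 ly)": 4.2 * KM_PER_LIGHT_YEAR,
}

if __name__ == "__main__":
    for name, d in DISTANCES_KM.items():
        print(f"{name:34s} plane: {years_by_plane(d):>14,.1f} yr"
              f"   light: {days_at_light_speed(d):>10,.3f} days")
```

Running it reproduces the figures in the text to within rounding, for example about 17 years by airplane to the Sun, and about 11.5 days for light to reach a distance of 2,000 AU.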
Stars with core masses less than 1.4 solar masses become white dwarfs. Stars with core masses between 1.4 and 3 solar masses explode as supernovae and leave behind neutron stars, and those with core masses greater than 3 solar masses collapse further into black holes. The remnants of supernova explosions can be recycled to form new stars.

Typically, fewer than a hundred stars are visible to the naked eye in a city, and about a thousand in the countryside under ideal conditions. Nearly all of these stars lie within our own Galaxy, most of them within a few thousand light-years of Earth.

iii. Our Galaxy (Milky Way)

The Milky Way is a barred spiral galaxy containing between 200 and 400 billion stars, along with vast amounts of gas, dust, and dark matter. Its diameter spans approximately 100,000 light-years, while its disk is only about 1,000 light-years thick, making it a relatively flat, disk-like structure with a central bulge. The Sun lies roughly 26,000 light-years from the galactic center and orbits it once every 220 million years, a period known as a galactic year. Our solar system resides in the Orion Spur, a minor arm located between the Sagittarius and Perseus spiral arms. Positioned about 60 light-years above the galactic plane, this location provides an advantageous vantage point for observing the universe in many directions with minimal obstruction from the dense dust and gas within the galactic disk.

Fig. 1.3. Our Galaxy (Milky Way)

iv. Galaxies, Clusters of Galaxies, and Superclusters

The Andromeda Galaxy (M31) is the closest large spiral galaxy to the Milky Way, located about 2.5 million light-years from Earth. It is visible to the naked eye from the Northern Hemisphere (visual magnitude 3.4) and has a shape similar to that of the Milky Way. The Andromeda Galaxy is approaching the Milky Way at about 110 km/s and is expected to collide with it in about 4 billion years. Galaxies can be broadly categorized into three main morphological classes: spiral, elliptical, and irregular.
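The galactic-year figure can be turned into an orbital speed: the Sun travels around a circle of radius 26,000 light-years once every 220 million years. The Python sketch below does that arithmetic under the simplifying assumption of a circular orbit; the result, a little over 220 km/s, is in line with commonly quoted values for the Sun's orbital speed.

```python
import math

KM_PER_LIGHT_YEAR = 9.461e12
SECONDS_PER_YEAR = 3.156e7  # about 365.25 days


def circular_orbit_speed_km_s(radius_ly: float, period_years: float) -> float:
    """Average speed along a circular orbit: circumference divided by period."""
    circumference_km = 2 * math.pi * radius_ly * KM_PER_LIGHT_YEAR
    return circumference_km / (period_years * SECONDS_PER_YEAR)


# Values from the text: 26,000 light-years from the center, one galactic year = 220 million years.
sun_speed = circular_orbit_speed_km_s(26_000, 220e6)
print(f"Sun's orbital speed ≈ {sun_speed:.0f} km/s")
```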
When two spiral galaxies collide, their gravitational interactions can transform them dramatically, often producing an elliptical galaxy. This process typically unfolds through stages involving interacting galaxies, followed by a luminous infrared galaxy (LIRG) or ultraluminous infrared galaxy (ULIRG) phase.

Fig. 1.4. Spiral galaxy, elliptical galaxy, and irregular galaxy

If fewer than 50 galaxies are gravitationally bound together, they form a "group of galaxies"; if hundreds or thousands are bound, they form a "cluster of galaxies." More than 40 nearby galaxies, including the Milky Way and Andromeda, belong to the Local Group. The Local Group and the Virgo Cluster are part of the Virgo Supercluster, which in turn is part of the Laniakea Supercluster.

A supercluster complex, also known as a galactic filament or supercluster chain, is an immense large-scale structure composed of numerous superclusters interconnected by vast networks of galaxies, gas, and dark matter. These interconnected regions form a web-like pattern and are the largest structures known in the cosmos, spanning hundreds of millions to billions of light-years and dwarfing smaller cosmic structures. Among them, the Hercules–Corona Borealis Great Wall stands out as the largest known supercluster complex, an awe-inspiring testament to the scale of the universe. The observable universe, about 93 billion light-years in diameter, contains an estimated 200 billion galaxies, each contributing to the intricate tapestry of cosmic structures.

Fig. 1.5. Nearby superclusters (yellow: Laniakea Supercluster)

b. Creation of the Universe

How did the universe begin? Has it always existed, or was it created by God?
To explore this topic, we will examine the origin of the universe as observed in astronomy and as described in the Book of Genesis in the Bible.

i. Creation of the Universe in Astronomy

The most widely supported theory about the origin of the universe is the Big Bang theory, which posits that the universe began approximately 13.8 billion years ago as an incredibly hot and dense point that rapidly expanded. This naturally raises the intriguing question: "What existed before the Big Bang?" One hypothesis holds that, prior to the Big Bang, the universe existed as a vacuum undergoing quantum fluctuations, a dynamic and probabilistic foundation from which our universe emerged.

Before Paul Dirac, the vacuum was thought of as empty space with nothing in it. In 1928, Dirac combined quantum mechanics and special relativity to describe the behavior of an electron moving at relativistic speeds. Interestingly, his equation allowed two kinds of solutions: one for an electron with positive energy and one for an electron with negative energy. Dirac proposed that the vacuum is not empty but filled with an infinite number of negative-energy electrons; a vacancy, or "hole," in this sea would behave as a positively charged particle, later identified as the positron. For this reason, this picture of the vacuum is called the Dirac Sea.

Fig. 1.6. 3-D model of quantum fluctuations in a vacuum

Although the Dirac Sea appears static, it is never truly at rest because of Heisenberg's uncertainty principle. Particle-antiparticle pairs spontaneously appear (pair production) and disappear (pair annihilation) at random. These events occur on time scales of about 10⁻²¹ seconds, far too fast for the human eye, but a camera able to capture them would show something like a fluctuating sea. This is what is called "quantum fluctuation." The Big Bang emerged from this sea of quantum fluctuations at a singular point. The Big Bang itself is the beginning of the universe.
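The time scale quoted for these fluctuations can be estimated from the energy-time form of the uncertainty principle, Δt ≈ ħ/ΔE, where ΔE is the energy needed to create the pair. The Python sketch below assumes an electron-positron pair (ΔE = 2mₑc², about 1.02 MeV) and treats the relation as an order-of-magnitude heuristic rather than an exact lifetime; the helper name is hypothetical.

```python
HBAR_J_S = 1.054571817e-34                  # reduced Planck constant (J·s)
ELECTRON_REST_ENERGY_J = 8.1871057769e-14   # m_e c^2, about 0.511 MeV, in joules


def virtual_pair_lifetime_s(particle_rest_energy_j: float) -> float:
    """Order-of-magnitude lifetime of a virtual particle-antiparticle pair, Δt ≈ ħ / ΔE."""
    delta_e = 2 * particle_rest_energy_j  # energy to create the particle and its antiparticle
    return HBAR_J_S / delta_e


dt = virtual_pair_lifetime_s(ELECTRON_REST_ENERGY_J)
print(f"electron-positron pair: about {dt:.1e} s")  # prints: electron-positron pair: about 6.4e-22 s
```

The result, a few times 10⁻²² s, is of the order of 10⁻²¹ s. Heavier pairs, such as proton-antiproton pairs, would vanish even faster, because Δt shrinks as the rest energy of the pair grows.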
Immediately after the Big Bang, the universe underwent rapid changes because of its extremely high temperature and density. From 10⁻⁴³ seconds (the Planck time) to 10⁻³⁶ seconds, the universe was in the grand unification epoch, in which three of the forces of the Standard Model (the strong, weak, and electromagnetic forces) are thought to have been unified, as described by Grand Unified Theories. The universe then passed through the inflationary epoch (10⁻³⁶ to 10⁻³² seconds), the electroweak epoch (10⁻³² to 10⁻¹² seconds), the quark epoch (10⁻¹² to 10⁻⁶ seconds), the hadron epoch (10⁻⁶ seconds to 1 second), and the lepton epoch (1 to 10 seconds).

At the end of the lepton epoch, a dramatic and pivotal event occurred. Lepton-antilepton pairs, primarily electrons and positrons, annihilated one another. This process released an immense number of photons (particles of light), effectively flooding the universe with light. These photons became the dominant form of energy in the cosmos, marking the beginning of what is known as the photon epoch. This era, lasting from about 10 seconds to 380,000 years after the Big Bang, was characterized by a hot, dense plasma of free electrons, nuclei, and photons. During this time, photons were constantly scattered by free electrons, which prevented them from traveling freely and made the universe opaque.

The recombination epoch followed at the end of the photon epoch, when another important event happened: electrons combined with protons and helium nuclei to form neutral hydrogen and helium atoms. (By this time the universe had already entered the matter-dominated era, which began roughly 50,000 years after the Big Bang.) As this happened, the plasma-filled universe gradually became transparent and turned into what we can call the sky. Photons produced during the photon epoch, previously trapped by the plasma, could now move freely through the transparent universe. Stretched by the expansion of the universe ever since, these photons are observed today as the cosmic microwave background radiation.
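The link between the light released at recombination and today's cosmic microwave background can be checked with two standard relations: radiation temperature scales as T = T₀(1 + z), and Wien's law, λ_peak = b/T, gives the wavelength at which blackbody emission peaks. The Python sketch below assumes T₀ = 2.725 K for today's background and a recombination redshift of about z = 1,090; neither number appears in the text, and both are rounded.

```python
T0_KELVIN = 2.725          # present-day CMB temperature (rounded, assumed)
Z_RECOMBINATION = 1_090    # approximate redshift of recombination (rounded, assumed)
WIEN_B_M_K = 2.898e-3      # Wien's displacement constant, m·K


def temperature_at_redshift(z: float, t0: float = T0_KELVIN) -> float:
    """Blackbody radiation cools as the universe expands: T(z) = T0 * (1 + z)."""
    return t0 * (1 + z)


def wien_peak_m(temperature_k: float) -> float:
    """Wavelength (m) at which a blackbody of the given temperature peaks."""
    return WIEN_B_M_K / temperature_k


t_then = temperature_at_redshift(Z_RECOMBINATION)
print(f"temperature at recombination ≈ {t_then:,.0f} K")
print(f"peak wavelength then ≈ {wien_peak_m(t_then) * 1e9:,.0f} nm")
print(f"peak wavelength today ≈ {wien_peak_m(T0_KELVIN) * 1e3:.2f} mm (microwaves)")
```

The two peak wavelengths differ by exactly the factor 1 + z, which shows how the expansion of the universe has stretched light released at about 3,000 K into today's microwave radiation.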
The stars and galaxies we see today formed from the atoms created during the recombination epoch. Since then, the universe has continued to expand in the aftermath of the Big Bang. When the universe was about 9.8 billion years old, dark energy began to dominate, marking the start of the dark-energy-dominated era. In this era, the universe expands at an accelerating rate, and this accelerated expansion is the current state of the universe.

ii. The Fate of the Universe (Big Bang Again?)

The fate of the universe depends on its overall density. According to measurements from WMAP, the current density of the universe is approximately equal to the critical density (about 10⁻²⁹ g cm⁻³) within a margin of error of 0.5%. Because of this uncertainty, we cannot yet definitively determine the universe's ultimate fate until more precise measurements are obtained.

If the universe's density is greater than the critical density, gravity will eventually overcome the expansion, causing the universe to collapse back on itself in a catastrophic event known as the Big Crunch, characteristic of a closed universe. Conversely, if the density is less than the critical density, the universe will expand forever, which is characteristic of an open universe and leads to a scenario known as the Big Freeze. In this case, the universe will gradually cool as expansion progresses, and star formation will eventually cease as the interstellar gas needed to form new stars is depleted. Existing stars will run out of fuel and stop shining, and the universe will become increasingly dark and cold, a process often referred to as "heat death." Subsequently, protons will decay, as predicted by Grand Unified Theories, when the universe is around 10³² years old. Around 10⁴³ years, black holes will start to evaporate via Hawking radiation.
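Hawking evaporation is extremely slow for black holes of astronomical mass. A standard textbook estimate for the time a black hole needs to evaporate completely, counting photon emission only, is t ≈ 5120πG²M³/(ħc⁴), so the lifetime grows as the cube of the mass. The Python sketch below applies that estimate to a few illustrative masses; because it ignores other emitted particles, treat the results as order-of-magnitude figures.

```python
import math

G = 6.67430e-11            # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34     # reduced Planck constant, J·s
C = 2.99792458e8           # speed of light, m/s
M_SUN_KG = 1.98847e30      # solar mass
SECONDS_PER_YEAR = 3.15576e7


def evaporation_time_years(mass_kg: float) -> float:
    """Photon-only Hawking evaporation time, t = 5120*pi*G^2*M^3 / (hbar*c^4), in years."""
    seconds = 5120 * math.pi * G**2 * mass_kg**3 / (HBAR * C**4)
    return seconds / SECONDS_PER_YEAR


# Illustrative masses: one solar mass, a million solar masses, a billion solar masses.
for solar_masses in (1, 1e6, 1e9):
    years = evaporation_time_years(solar_masses * M_SUN_KG)
    print(f"{solar_masses:>8.0e} solar masses: about {years:.0e} years")
```

Because the time scales as M³, a black hole a billion times the Sun's mass lasts 10²⁷ times longer than a solar-mass one.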
After all baryonic matter has decayed and all black holes have evaporated, the universe will be filled only with radiation. Its temperature will approach absolute zero, and everything will be dark and empty, resembling the state of the universe undergoing quantum fluctuations before the Big Bang.

Fig. 1.7. Fate of the universe and evaporating black hole

Recently, two cosmic megastructures were discovered about 7 billion light-years from Earth in the direction of the Big Dipper. The Giant Arc, discovered in 2022, and the Big Ring, discovered in 2024, challenge the cosmological principle, which states that the universe is homogeneous and isotropic on large scales. These megastructures require an explanation. One possibility is that they are huge cosmic strings or remnants of the Hawking evaporation of supermassive black holes (Hawking points) from the previous Big Bang. This interpretation is related to Roger Penrose's Conformal Cyclic Cosmology (CCC), a cosmological model based on general relativity in which the universe expands forever until its matter has decayed and its black holes have evaporated. In CCC, the universe passes through an endless series of cycles, with a new Big Bang emerging within the ever-expanding current Big Bang.

Fig. 1.8. Big Ring (blue) and Giant Arc (red)

Personally, I find CCC appealing because it offers potential solutions to some problems in galaxy evolution. The mass of a galaxy's central black hole correlates with the velocity dispersion of the surrounding stars (the M-sigma relation), and related scaling relations indicate that a black hole's mass is typically about 0.1% of the mass of its host galaxy's bulge. Recently, Chandra and JWST discovered an intriguing galaxy, UHZ1, with the help of gravitational lensing. UHZ1 lies 13.2 billion light-years away and is seen as it was when the universe was only about 3 percent of its current age. The estimated mass of its black hole turned out to be comparable to, or even larger than, the stellar mass of its host galaxy.
Such a large black hole mass is difficult to explain with current theories of black hole growth, but it could be explained by CCC if the black hole in UHZ1 was recycled from the previous Big Bang and became a seed black hole in UHZ1 during the current Big Bang.

We do not know how a new Big Bang could occur while the current Big Bang is still expanding, but we could try using the concept of hyperspace. We usually picture the universe as expanding within three-dimensional space. However, imagine our three-dimensional universe as a surface embedded in a higher-dimensional space (hyperspace). This space could have four or more dimensions, and our entire universe would be just a "slice," or "brane," within it. As our universe continues to expand, it might eventually converge to a singular point in this hyperspace, much as a two-dimensional surface can curve and converge to a point in three-dimensional space. This point in hyperspace could be analogous to the neck of a Klein bottle, a surface that loops back through itself when drawn in three dimensions.

When the universe's expansion converges to this singular point in hyperspace, it could create conditions in which the energy density becomes extremely high. If this point cannot accommodate the immense influx of energy, including vacuum energy, from the current expanding universe, the result could be an explosion. This explosion would be the start of a new Big Bang, creating a new universe. In this way, the ever-expanding current universe could give rise to a new universe within the hyperspace framework, with the convergence to a singular point acting as the bridge between the cycles of CCC.
This higher-dimensional convergence provides a possible mechanism for continuous cycles of Big Bangs while the current universe is still expanding, and the energy of this expanding universe could also contribute to the dark energy driving its accelerating expansion.

Fig. 1.9. Conformal Cyclic Cosmology

iii. The Creation of the Universe in the Bible

In this section, I will explore the creation of the universe as described in the Bible from an astronomical perspective, examining possible parallels between the scriptural account and modern astronomical observations. While this approach provides an interesting perspective, it is important to recognize that there are other ways to interpret the creation account in the Bible. These interpretations vary with theological, philosophical, and cultural contexts, each offering unique insights into the profound narrative of the universe's origins.

a) God declared the creation of the universe

The creation of the universe is described in Genesis, the first book of the Bible.

“In the beginning, God created the heavens and the Earth.” (Genesis 1:1)

This verse introduces the act of creation by God, asserting that He is the initiator of everything that exists. The phrase "the heavens and the Earth" encompasses all of creation, indicating the totality of the universe.

“The earth was without form and void, and darkness was over the face of the deep. And the Spirit of God was hovering over the face of the waters.” (Genesis 1:2)

The term "earth" here represents the physical, material creation (i.e., baryonic matter) that God would later shape. The phrase "The earth was without form" can be interpreted as describing a primordial state of emptiness in which nothing had yet been created. The term "void" signifies an empty space, and a space with nothing in it can legitimately be called a vacuum.
Therefore, the phrase "The earth was without form and void" suggests that, from the very beginning, the universe existed as a vacuum, an initial state of nothingness. The next phrase, ‘darkness was over the face of the deep,’ has a profound meaning. The word ‘darkness’ is חֹשֶׁך (choshek) in Hebrew and literally means total darkness without any light. The word ‘deep’ is תְּהוֹם (tehom) in Hebrew and is derived from הום (hom), meaning ‘uproar’ or ‘fluctuate.’ Thus, "The earth was without form and void, and darkness was over the face of the deep" can be interpreted as describing the origin of the universe from a vacuum in a state of darkness and fluctuation. This interpretation aligns closely with the condition of the universe at its earliest stage, just before the Big Bang, when it existed as a vacuum undergoing quantum fluctuations.

b) The creation of light

The main event on the first day of creation is the creation of light.

“And God said, ‘Let there be light,’ and there was light.” (Genesis 1:3)

The verse states that God initiated the creation of the universe by creating light. Similarly, the Big Bang began with a series of rapid epochs, which together lasted only about ten seconds, ultimately leading to the creation of light (photons) in the photon epoch. The creation of light in Genesis 1:3 corresponds remarkably with the creation of light at the start of the photon epoch, powerfully aligning the biblical account with this pivotal moment in the early universe.

c) The Creation of the Sky

The main event on the second day of creation is the creation of the sky (heavens).

“And God made the vault and…, God called the vault sky….” (Genesis 1:7, 8)

The creation of the sky described in Genesis can be correlated with the recombination epoch in Big Bang cosmology. Before this epoch, the universe was filled with a dense, hot plasma of electrons, protons, helium nuclei, and photons. This plasma scattered photons, preventing them from traveling freely and making the universe opaque to radiation. During this time, the universe was about 10 light-years across, meaning there was no clear space for a visible "sky." However, in the recombination epoch, the universe cooled enough for electrons and protons to combine into neutral hydrogen atoms. This process cleared the plasma, making the universe transparent and allowing photons to travel freely through space. As a result, a vast, transparent expanse, what we recognize as the visible sky, came into existence with a radius of about 42 million light-years. Thus, the creation of the sky in Genesis 1:7-8 can be interpreted as a reference to this pivotal event in cosmic history. The following table summarizes the creation of the universe as described in the Bible and as explained by astronomy.
The comparison shows that the creation account in Genesis aligns with astronomical facts to a remarkable degree, affirming that God had already revealed these truths through the Bible long before they were discovered by science.

| Genesis | Astronomy |
|---|---|
| Vacuum fluctuation (Gen 1:2, before Creation) | Vacuum fluctuation (before the Big Bang) |
| Creation of light (Gen 1:3, Creation Day 1) | Creation of light (photon epoch) |
| Creation of the sky (Gen 1:7-8, Creation Day 2) | Creation of the sky (recombination epoch) |

Table 1.1. Comparison of Creation in Genesis and Astronomy

c. Which was Created First, the Earth or the Sun?

The main event on the third day of creation in Genesis is the creation of dry land and sea. This can be understood as the period during which the Earth was formed and structured. The gathering of the waters and the appearance of dry land signify the development of the Earth's surface and geographical features. The main event on the fourth day in Genesis is the creation of the Sun. Thus, in Genesis, the Earth was created before the Sun. It will be interesting to examine whether the Biblical account is consistent with astronomical observations. Let's explore it.

Stars and planets form from molecular clouds. By mass, molecular clouds are made up of about 98% gas (about 70% hydrogen and 28% helium) and 2% dust (carbon, nitrogen, oxygen, iron, etc.). Most stars and Jovian planets are made of gas, and most terrestrial planets are made of dust. Protostars form when molecular clouds collapse under their own gravity. During this process, the remaining material from the cloud forms a rotating disk known as a protoplanetary disk, the region where planets eventually take shape. The gravitational collapse heats and compresses the core, leading to the birth of a protostar, while the surrounding spinning disk provides the environment for the formation and evolution of planetary bodies.
As the protostar continues to contract, it becomes a pre-main-sequence star and follows the stellar evolutionary tracks known as the Hayashi track (for low-mass stars) and the Henyey track (for high-mass stars) in the Hertzsprung-Russell (H-R) diagram. Pre-main-sequence stars are observed as T Tauri stars if their mass is less than 2 solar masses and as Herbig Ae/Be stars if their mass is greater than 2 solar masses. A pre-main-sequence star continues to contract until its internal temperature rises to 10 to 20 million kelvin. At this point, it starts hydrogen fusion and becomes a true star in the sky. Stars in this stage are called main-sequence stars. According to stellar evolution theory and helioseismology studies, the Sun remained in the pre-main-sequence stage for about 40 to 50 million years before it became a main-sequence star.

Fig. 1.10. Protostar and protoplanetary disk, and H-R diagram

While the star is forming at the center, planets are forming in the protoplanetary disk. Colliding dust particles and gas form pebbles, pebbles grow into rocks, and rocks develop into planetesimals, the building blocks of planets. Only recently has the planet formation process in protoplanetary disks been studied in detail. Studies predict that it takes a few million years to form an Earth-sized planet from 1 mm-sized pebbles. This prediction can be tested with actual observations, including ALMA sub-millimeter images of the T Tauri stars HL Tau and PDS 70. HL Tau has approximately two solar masses and is about one million years old. Its image reveals gaps in the protoplanetary disk, indicating that several planets have already formed and are orbiting the central pre-main-sequence star. PDS 70 has about 0.76 solar masses and is about 5.4 million years old. Two of its exoplanets, PDS 70b and PDS 70c, have been directly imaged by ESO's VLT.
In 2023, spectroscopic observations by the James Webb Space Telescope detected water in the terrestrial planet-forming region of the PDS 70 protoplanetary disk and suggested that two or more terrestrial planets have formed there. It is important to note that the gas and dust seen in HL Tau have largely been cleared in PDS 70, and that water-bearing terrestrial planets have formed in its inner region. Forming these terrestrial planets took 5.4 million years, but even if it had taken 10 million years, that would still be much less than the 40 to 50 million years the Sun needed to become a main-sequence star. This suggests that the Earth was created earlier than the Sun, as stated in Genesis, consistent with astronomical observations.

Fig. 1.11. HL Tau and PDS 70

Another main event God performed on the third day was the creation of plants and trees. Atheists and evolutionists often ask how these plants and trees could have survived if the Sun was created on the fourth day. This question can be addressed within the context of stellar evolution theory. When the Earth was formed, the Sun was still in the T Tauri stage. Although T Tauri stars are not main-sequence stars, their surface temperatures range from 4,000 to 5,000 kelvin, and blackbody radiation at these temperatures peaks at visible wavelengths. Furthermore, as a T Tauri star the Sun was several times larger than it is today. Therefore, it could provide enough energy at visible wavelengths to enable photosynthesis in plants and trees.

d. Is the Earth 6,000 Years Old?

Young Earth creationism is the belief that the Earth and the universe are relatively young, typically around 6,000 to 10,000 years old, based on a literal interpretation of the creation account in Genesis. Young Earth creationists believe that the Earth was created in six 24-hour days and reject much of the modern scientific consensus regarding the age of the Earth and the universe.
Extensive scientific evidence from various fields, including geology, astronomy, and physics, indicates that the Earth is approximately 4.6 billion years old and the universe about 13.8 billion years old. Despite this ample evidence, young Earth creationists do not agree. This situation is reminiscent of the debate between the geocentric and heliocentric models in the days of Galileo Galilei.

Before delving into the main discussion, let's consider a few examples that make it easy to see that the Earth and the universe are at least several million years old.

The Earth's crust is composed of tectonic plates that move slowly and cause earthquakes. No one would deny this fact. A hot spot is a fixed point where magma rises from deep within the mantle beneath the crust. When this magma flows out onto the crust and cools, it forms land. The Hawaiian Islands are a prime example of this process. On the Big Island of Hawaii, Kilauea is still an active volcano, and as the lava it erupts cools in the seawater, new land is formed. Because of plate tectonics, the newly formed land moves northwest at a rate of about 7 to 10 cm per year, and this process has created the various islands of Hawaii. It is happening even now, and it is an undeniable fact. Considering the speed at which the tectonic plates move, the ages of the Hawaiian Islands are estimated as follows: the Big Island is 400,000 years old, Maui is 1 million years old, Molokai is 1.5 to 2 million years old, Oahu (where Waikiki is located) is 3 to 4 million years old, and Kauai is about 5 million years old. On the Big Island, much of the land is still covered in black volcanic soil, indicating minimal weathering. In contrast, Kauai has undergone significant weathering, allowing vegetation to flourish and earning it the nickname "The Garden Isle." This example provides direct evidence that the Earth is at least several million years old.

Fig. 1.12. Geologic history of the Hawaiian Islands

To see directly that the universe is at least several million years old, one only needs to accept that light travels at 300,000 km per second. The Sun is 150 million km away from Earth, so the sunlight we receive now left the Sun 8.3 minutes ago. The Sun is about 400 times larger than the Moon, but because it is also about 400 times farther away, it appears about the same size as the Moon in the sky. No one would deny this. The Andromeda Galaxy is similar in size to our Milky Way but is 2.5 million light-years away, making it appear about four times the size of the Moon. The fact that we can see the Andromeda Galaxy means that the light we are observing was emitted by Andromeda 2.5 million years ago and has only now reached us. If you have seen the Andromeda Galaxy, you cannot deny this fact. This is direct evidence that the universe is at least several million years old.

Despite these facts, if one still insists that the Earth is 6,000 years old, it could become a stumbling block rather than an aid in spreading the gospel, potentially distancing many people from it. Therefore, instead of advocating young Earth creationism, it might be more reasonable to read Genesis carefully and try to find a solution there. For humans, time always flows from the present to the future and never flows backward. We define one day as 24 hours, but if we had been created on another planet, a day would not be 24 hours. For example, if we had been created on Venus, one day (one rotation) would last 243 Earth days, and on Jupiter it would last about 10 Earth hours. Therefore, unless we change our Earth-centered definition and perception of time, it will be difficult to address this issue. Let’s discuss this further with these facts in mind.

i. The Days in Genesis

First, let's estimate the age of the universe based on the records in Genesis. According to Genesis, God created the universe and everything in it over six days.
The time elapsed from Adam to Noah can be estimated from the genealogical records in Genesis 5:3–32. Noah's flood occurred when Noah was 600 years old, and the total number of years from Adam to the flood is 1,656 years. We do not know exactly when Noah's flood occurred. Some biblical scholars and traditions attempt to date it using the genealogies in the Bible and place it around 2300–2400 BC, that is, about 4,400 years ago. Therefore, according to this interpretation, the age of the universe is 7 days + 1,656 years + 4,400 years ≈ 6,056 years. This is the theoretical basis of the young Earth creationists' claim that the Earth is 6,000 years old.

To address the day-age problem, let's take another look at Genesis. While there seem to be no issues with the genealogical records in Genesis, there may be some debate regarding the exact year of Noah's flood. However, whether Noah's flood occurred 4,400 years ago or 44,000 years ago makes no significant difference to the age of the universe as understood in the scientific context of 13.8 billion years.

So, where is the key to resolving the day-age problem? Perhaps you have already noticed: the key lies in the interpretation of the first seven days of creation.

To define a day, both the Sun and the Earth must exist beforehand. The reason is simple: a day is defined by the rotation period of the planet we live on. However, Genesis records that the Earth was created on the third day and the Sun on the fourth day, yet God used the terms "day" and "night" even before their creation. This implies that the "day" in Genesis is not the 24-hour day as we define it, but a "day" as defined by God.

Fig. 1.13. To define a day, the Earth and Sun must exist beforehand.

The fallacy of young Earth creationists lies in assuming that the "day" mentioned in Genesis is a literal 24-hour human day, which leads them to misinterpret the term "day" in the Genesis account.
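The 1,656-year and 6,056-year figures used earlier in this subsection can be reproduced with a few lines of arithmetic. This is a minimal sketch, assuming the begetting ages as they appear in Genesis 5 in common English translations (based on the Masoretic text) and Noah's age of 600 at the flood; the names `BEGETTING_AGES` and `young_earth_age_years` are hypothetical labels for illustration. It also shows why moving the flood from 4,400 to 44,000 years ago barely changes the comparison with 13.8 billion years.

```python
# Age of each patriarch at the birth of the next named descendant (Genesis 5,
# as given in common English translations based on the Masoretic text).
BEGETTING_AGES = {
    "Adam": 130, "Seth": 105, "Enosh": 90, "Kenan": 70, "Mahalalel": 65,
    "Jared": 162, "Enoch": 65, "Methuselah": 187, "Lamech": 182,
}
NOAH_AGE_AT_FLOOD = 600      # Noah's age when the flood began
DAYS_PER_YEAR = 365.25       # used only to express the creation week in years
UNIVERSE_AGE_YEARS = 13.8e9  # scientific estimate quoted in the text


def adam_to_flood_years() -> int:
    """Sum of the begetting ages from Adam to Lamech, plus Noah's age at the flood."""
    return sum(BEGETTING_AGES.values()) + NOAH_AGE_AT_FLOOD


def young_earth_age_years(flood_years_ago: float) -> float:
    """Creation week + Adam to the flood + flood to the present, in years."""
    return 7 / DAYS_PER_YEAR + adam_to_flood_years() + flood_years_ago


if __name__ == "__main__":
    print(f"Adam to the flood: {adam_to_flood_years()} years")
    for flood_years_ago in (4_400, 44_000):
        age = young_earth_age_years(flood_years_ago)
        share = age / UNIVERSE_AGE_YEARS
        print(f"Flood {flood_years_ago:,} years ago -> {age:,.0f} years "
              f"({share:.1e} of 13.8 billion years)")
```

Either flood date gives a total that is only a few millionths of 13.8 billion years, which is the point made in the text.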
If the days in Genesis are not 24-hour periods as defined by humans, you might wonder how long they are in terms of human days. While we do not know the exact answer, we can estimate an approximate period by comparing the creation events described in Genesis with those of the Big Bang.

The main event on the first day of creation is the creation of light. The photon epoch in the Big Bang corresponds to this event, so the first day lasted 380,000 years in human time.

The main event on the second day of creation is the creation of the sky. The recombination epoch corresponds to this event, so the second day lasted 100,000 years in human time.

The main event on the third day is the creation of the Earth.

As we saw in the previous section, it takes about 10 million years for Earth to form, so the third day of creation would have been over 10 million years long.

Similarly, the main event on the fourth day is the creation of the Sun. Since it takes roughly 40 to 50 million years for the Sun to form, the fourth day of creation would have been over 40 million years long.

The following table summarizes the above results.

Table 1.2. Days of Creation in Genesis Interpreted in Human Time

Day in Creation | Event in Genesis | Event in Astronomy | Human Time
Day 1 | Creation of light | Creation of light in the photon epoch | 380,000 years
Day 2 | Creation of the sky | Creation of the sky in the recombination epoch | 100,000 years
Day 3 | Creation of the Earth | Creation of the Earth | > 10 million years
Day 4 | Creation of the Sun | Creation of the Sun | > 40 million years

Here, we notice some unexpected facts about the concept of time as used by God. The days in the creation account are much longer than a human day of 24 hours. Furthermore, God's time is not fixed but varies, ranging from 100,000 years to more than 40 million years. How can we understand this? In some sense, this is not a surprising result but an expected one.

ii. The Creator of Time

The "day" used in Genesis is yom (יום) in Hebrew. Yom can be interpreted in several ways, including as an age or a long period of time. This interpretation suggests that each "day" of creation represents a lengthy epoch during which specific acts of creation took place. Another interpretation is that yom signifies a period of indeterminate length. This view holds that God's days are not bound by human time constraints, acknowledging that God, as the creator of time, operates outside of our temporal limitations.

Examples of this interpretation can be found in the Bible. In 2 Peter in the New Testament, it is written:

"But do not forget this one thing, dear friends: With the Lord a day is like a thousand years, and a thousand years are like a day." (2 Peter 3:8)

This passage is meant to encourage those who wait for God's promises to do so patiently. It may also suggest that God's perspective on time differs from that of humans, implying that God can expand or contract time as He wills.

We understand that time is not a fixed quantity. According to special relativity, a clock moving at speed $v$ relative to an observer runs more slowly than the observer's own clock ($\Delta t_0 = \Delta t \sqrt{1 - v^2/c^2}$, where $\Delta t_0$ is the time elapsed on the moving clock, $\Delta t$ is the time elapsed for the observer, and $c$ is the speed of light). In general relativity, time passes more slowly in a strong gravitational field ($\Delta t_0 = \Delta t \sqrt{1 - 2GM/(rc^2)}$, where $\Delta t_0$ is the time elapsed on a clock at distance $r$ from a mass $M$, $\Delta t$ is the time elapsed far from that mass, and $G$ is the gravitational constant).

Fig. 1.14. Illustration of time dilation

God can not only expand or contract time but also stop it. In the Old Testament book of Joshua, it is written:

"The Sun stopped in the middle of the sky and delayed going down about a full day." (Joshua 10:13)

This miracle occurred during Joshua's battle with the Amorites and demonstrates that God has the power to freeze time. Furthermore, God performed an even more astonishing miracle, as recorded in 2 Kings of the Old Testament:

"Then the prophet Isaiah called on the LORD, and the LORD made the shadow go back the ten steps it had gone down on the stairway of Ahaz." (2 Kings 20:11)

The verse above reflects God's response to King Hezekiah's tearful prayer for a longer life. In His mercy, God heard Hezekiah and granted him 15 additional years. To confirm His promise, God performed a miraculous sign, causing the shadow on the stairway of Ahaz (a sundial) to move backward by ten steps. This miracle indicates that God has the power to reverse time, something beyond the scope of our current scientific understanding.

Fig. 1.15. Stairway of Ahaz (Sundial)

For humans, time flows in one direction, from the present to the future, but for God, as shown in the Bible, time is a variable He can control. God can shorten, extend, freeze, or even reverse time, demonstrating His sovereignty over natural laws and highlighting the contrast between human limitations and His infinite power.

e. The Fine-tuned Universe

The fine-tuned universe refers to the fact that the fundamental physical constants that make up and govern the universe are finely tuned with extreme precision for life to exist. If the density of the universe had been greater than the critical density, the universe would have contracted immediately after its formation. Conversely, if it had been smaller than the critical density, the universe would have expanded too rapidly, preventing the formation of stars and galaxies. In either case, we would not exist in this world.

In his book The Emperor's New Mind, Penrose used the Bekenstein-Hawking formula for black hole entropy to estimate the odds of the initial conditions at the Big Bang. He calculated that the likelihood of the universe coming into existence in a way that would develop and support life as we know it is 1 in 10 to the power of $10^{123}$, that is, 1 in $10^{10^{123}}$. This suggests that our universe did not arise from random chance but through extraordinary fine-tuning by the divine Creator!

The fundamental constants of physics, such as the gravitational constant, the speed of light in vacuum, Planck's constant, Boltzmann's constant, the electric constant, the elementary charge, and the fine-structure constant, must be fine-tuned for life to exist in the universe. If these constants were even slightly different, the universe would be unable to support life. For example, if the gravitational constant were smaller than it is now, the force of gravity would be weaker. This reduced gravitational pull would make it impossible for matter to coalesce into stars, galaxies, and planets, including the Earth we live on today.

If Planck's constant were larger than it is now, several fundamental changes in the physical universe would occur. Firstly, the intensity of solar radiation would decrease, leading to less energy reaching the Earth from the Sun. This reduction in energy would impact many natural processes, including climate and weather patterns. Additionally, a larger Planck's constant would increase the size of atoms, because the quantized atomic energy levels would change (the Bohr radius grows as the square of Planck's constant). This increase would weaken the bonding strength of atoms and molecules, making chemical reactions less stable. Photosynthesis in plants, which relies on the precise absorption of light energy to convert carbon dioxide and water into glucose, would become less efficient. The overall biochemical and physical processes that depend on the current balance of quantum mechanics would be altered, resulting in a dramatically different and less stable environment for life.

Among the fundamental constants, the fine-structure constant has attracted special attention from physicists. The fine-structure constant, denoted by the Greek letter α, quantifies the strength of the electromagnetic interaction between elementary charged particles. It is a dimensionless quantity with an approximate value of 1/137, a figure that has intrigued physicists since its discovery. Its precise value is crucial to the stability of the universe and the existence of life. If it were even slightly different from its current value, life as we know it would not exist.

If α were greater than 1/137, the electromagnetic interaction between particles would become stronger. This would result in electrons being more tightly bound to the nucleus, reducing the size of atoms and making the formation of heavy elements easier, while light elements such as hydrogen would be less likely to form. Since hydrogen is a crucial raw material for nuclear fusion, this change would directly affect the survival of life by limiting the availability of hydrogen needed for energy production in the Sun and stars. Conversely, if α were smaller than 1/137, the electromagnetic interaction between particles would become weaker. Electrons would be less tightly bound to the nucleus, leading to unstable atoms and molecules. Such instability would cause atoms and molecules to decay more easily, preventing the formation of complex molecules like DNA and proteins, which are essential for life. Thus, any significant change in the fine-structure constant would have profound implications for the formation of matter and the potential for life in the universe.

We do not know the origin of its numerical value, α ≈ 1/137. Dirac considered the origin of α to be "the most fundamental unsolved problem of physics." Feynman described α as a "God's number" or "magic number" that shapes the universe and comes to us without understanding. In his words, you might say the "hand of God" wrote that number, and "we don't know how He pushed His pencil."

If we rewrite the defining equation of α, $\alpha = e^2/(4\pi\varepsilon_0\hbar c)$, where $e$ is the elementary charge, $\varepsilon_0$ the electric constant, $\hbar$ the reduced Planck constant, and $c$ the speed of light, it can represent several ratios: the speed of the electron in the ground state of the Bohr model of hydrogen to the speed of light (i.e., light travels about 137 times faster than that electron), the electrostatic repulsion energy of two electrons separated by a distance $d$ to the energy of a single photon of wavelength $2\pi d$, and the classical electron radius to the reduced Compton wavelength of the electron. Additionally, the ratio of the strength of the electromagnetic force to that of the gravitational force is about $10^{36}$ (for two protons), and the ratio of the electromagnetic force to the strong force is about 1/137. Thus, the numerical value of the dimensionless constant α could serve as a reference point for the four fundamental forces.

As mentioned in Chapter 3, "Particle Physics and Creation," all ordinary (baryonic) matter in the universe is composed of the fundamental particles described by the Standard Model (quarks, leptons, gauge bosons, and the Higgs boson), altogether numbering 17. Each particle possesses its own unique mass, charge, and spin. If any of these fundamental properties were even slightly different, the atomic, molecular, biological, and cosmic structures we know would not exist.

For example, if the mass difference between up quarks and down quarks were altered, the delicate balance that makes protons stable and neutrons only slightly heavier would be disrupted. In such a case, either hydrogen could not form or heavier nuclei could not be synthesized, making atoms impossible. If the electron's mass were significantly different, atomic sizes and energy levels would shift, and stable chemical bonding would no longer occur, preventing the formation of complex molecules. If the Higgs boson's properties were changed, the mechanism that gives mass to all elementary particles would be altered, reshaping the very structure of the universe.

Furthermore, if the electric charges of protons and electrons were not exactly equal and opposite, neutral atoms could not exist. If the charges of quarks were different, the properties of protons and neutrons would change, undermining the possibility of atomic nuclei. If electrons did not have a spin of 1/2, the Pauli exclusion principle would not apply to them, and atoms could not maintain their structure. Likewise, if bosons did not have integer spin values, the quantum field framework that allows forces such as electromagnetism, the strong force, and the weak force to operate would break down. Finally, if the Higgs boson were not a spin-0 particle, the mass-generation mechanism itself would fail, and particles could not exist in their present form.

The fine-tuned universe reflects the astonishing balance and precision that underlie the existence of all things. From the universe's critical density being set with unimaginable exactness, to Penrose's calculation of the vanishingly small probability of such initial conditions, to the delicate values of the gravitational constant, Planck's constant, and the fine-structure constant, every detail points to a cosmos that is exquisitely calibrated for life. Even the fundamental particles themselves (quarks, leptons, bosons, and the Higgs) possess precisely the right masses, charges, and spins to permit atoms, molecules, stars, and ultimately living beings to exist. Such harmony cannot reasonably be ascribed to blind chance.

This extraordinary precision not only inspires awe but also compels us to ask deeper questions about the universe's origin and purpose. The seamless interplay of physical laws bears the mark of intentional design, and the concept of divine creation offers a profound and compelling explanation. Just as an orchestra produces a beautiful symphony only when every instrument is perfectly tuned, so too does the universe testify to the wisdom and power of the Creator, who has ordered all things with purpose and meaning.

If those who merely discovered the fundamental principles of the universe (gravity, relativity, the uncertainty principle, Pauli's exclusion principle, and the Higgs mechanism) are honored as geniuses and awarded Nobel Prizes, how much greater is God, the Creator who not only conceived these laws and principles but also brought the entire universe into being?
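The value α ≈ 1/137 discussed in this section, and the claim that it "represents" several ratios, can be checked numerically from the defining formula α = e²/(4πε₀ħc). The sketch below is a minimal Python example, assuming CODATA 2018 recommended values for the constants; the function names are hypothetical and chosen for readability. It evaluates α directly and then three of the ratios mentioned in the text (Bohr ground-state speed over c, classical electron radius over reduced Compton wavelength, and Coulomb energy over photon energy), each of which reduces algebraically to the same expression.

```python
import math

# CODATA 2018 recommended values in SI units (e and c are exact by definition).
E_CHARGE = 1.602176634e-19    # elementary charge e, C
EPSILON_0 = 8.8541878128e-12  # electric constant (vacuum permittivity), F/m
HBAR = 1.054571817e-34        # reduced Planck constant, J*s
C = 299_792_458.0             # speed of light in vacuum, m/s
M_E = 9.1093837015e-31        # electron mass, kg

# e^2 / (4*pi*eps0) appears in every ratio below (units: J*m).
COULOMB_FACTOR = E_CHARGE**2 / (4 * math.pi * EPSILON_0)


def fine_structure_constant() -> float:
    """alpha = e^2 / (4*pi*eps0*hbar*c)."""
    return COULOMB_FACTOR / (HBAR * C)


def bohr_speed_over_c() -> float:
    """Ground-state electron speed in the Bohr model of hydrogen divided by c."""
    v1 = COULOMB_FACTOR / HBAR  # v1 = e^2 / (4*pi*eps0*hbar)
    return v1 / C


def electron_radius_over_compton() -> float:
    """Classical electron radius divided by the reduced Compton wavelength."""
    r_e = COULOMB_FACTOR / (M_E * C**2)
    reduced_compton = HBAR / (M_E * C)
    return r_e / reduced_compton


def coulomb_over_photon_energy(d: float = 1e-10) -> float:
    """Coulomb energy of two electrons a distance d apart divided by the energy
    of one photon of wavelength 2*pi*d (the ratio does not depend on d)."""
    coulomb_energy = COULOMB_FACTOR / d
    h = 2 * math.pi * HBAR          # Planck's constant
    wavelength = 2 * math.pi * d
    photon_energy = h * C / wavelength
    return coulomb_energy / photon_energy


if __name__ == "__main__":
    alpha = fine_structure_constant()
    print(f"alpha   = {alpha:.9f}")
    print(f"1/alpha = {1 / alpha:.3f}")
    print(f"v1/c                             = {bohr_speed_over_c():.9f}")
    print(f"r_e / reduced Compton wavelength = {electron_radius_over_compton():.9f}")
    print(f"Coulomb / photon energy          = {coulomb_over_photon_energy():.9f}")
```

With these inputs, 1/α prints as 137.036, and the three ratios print the same value as α itself, which is the sense in which the single number α can be read as each of those ratios.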

2. God's Masterpiece: the Earth (How special is Earth in the universe?)

The Earth we live on provides several fine-tuned conditions essential for the survival of living organisms. These conditions are so precise that they often serve as an extension of the fine-tuned universe.

In this context, we will explore ten special conditions of Earth that are particularly unique and crucial for supporting life as we know it. These conditions highlight the extraordinary balance and precision required to sustain living organisms, making our planet an exceptional oasis in the vast expanse of the universe. By examining these unique attributes, we can gain a deeper appreciation for the intricate interplay of factors that enable life to thrive on Earth.

a. Right Distance from the Sun

The presence of liquid water is crucial for life. To have liquid water, a planet must orbit within a specific region around its central star. If the planet is too close to the star, all the water will boil away, and if it is too far, all the water will freeze. The range of orbits where water neither boils nor freezes is called the ‘habitable zone’. The estimated habitable zone in our solar system lies between 0.95 AU and 1.15 AU (1 AU is the mean distance from the Earth to the Sun). Thus, if Earth were more than 5% closer to the Sun or more than 15% farther from it, we would not be here.

The habitable zone occupies only about 0.05% of the area of the ecliptic plane out to the orbit of Neptune (30 AU). The eccentricity of Earth's orbit is another important factor, because it determines whether the planet stays within the habitable zone throughout its orbit. For example, if the eccentricity were larger than 0.5, all water would boil every year near perihelion (the point of the orbit closest to the Sun) and freeze every year near aphelion (the point farthest from the Sun). Fortunately, Earth's eccentricity is only 0.017, resulting in an almost circular orbit.
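The 0.05% figure and the eccentricity argument above are simple geometry. A minimal Python sketch, assuming the habitable-zone limits quoted in the text (0.95 to 1.15 AU) and treating the region out to Neptune as a flat disk of radius 30 AU: the habitable zone is an annulus whose area is compared with that disk, and an orbit with semi-major axis a and eccentricity e ranges from a(1 − e) at perihelion to a(1 + e) at aphelion. The function names are hypothetical.

```python
import math

# Habitable-zone limits and outer radius quoted in the text, in AU.
HZ_INNER_AU = 0.95
HZ_OUTER_AU = 1.15
NEPTUNE_AU = 30.0


def hz_area_fraction(inner: float = HZ_INNER_AU, outer: float = HZ_OUTER_AU,
                     disk_radius: float = NEPTUNE_AU) -> float:
    """Area of the habitable-zone annulus divided by the area of a disk out to disk_radius."""
    annulus = math.pi * (outer**2 - inner**2)
    disk = math.pi * disk_radius**2
    return annulus / disk


def orbit_extremes(semi_major_au: float, eccentricity: float) -> tuple[float, float]:
    """Perihelion a(1 - e) and aphelion a(1 + e) of an elliptical orbit, in AU."""
    return semi_major_au * (1 - eccentricity), semi_major_au * (1 + eccentricity)


def stays_in_hz(semi_major_au: float, eccentricity: float) -> bool:
    """True if both perihelion and aphelion lie inside the quoted habitable zone."""
    peri, aph = orbit_extremes(semi_major_au, eccentricity)
    return HZ_INNER_AU <= peri and aph <= HZ_OUTER_AU


if __name__ == "__main__":
    print(f"Habitable-zone share of the disk out to Neptune: {100 * hz_area_fraction():.3f}%")
    for e in (0.017, 0.5):
        peri, aph = orbit_extremes(1.0, e)
        print(f"e = {e}: perihelion {peri:.3f} AU, aphelion {aph:.3f} AU, "
              f"stays in habitable zone: {stays_in_hz(1.0, e)}")
```

The area ratio comes out near 0.047%, which rounds to the 0.05% in the text. For e = 0.017 the orbit stays between about 0.98 and 1.02 AU, while for e = 0.5 it swings from 0.5 AU to 1.5 AU, well outside both limits.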

Fig. 2.1. Habitable zone (green) in the solar system

b. The Right Axial Tilt

The rotation axis of the Earth is tilted at about 23.5 degrees. Because of this, we have four seasons and mild weather. What would happen if the rotation axis were not tilted at all (0 degrees; compare Mercury's axial tilt of about 0.0 degrees) or were completely tilted (90 degrees; compare Uranus's axial tilt of 82.2 degrees)?

If Earth's rotation axis were not tilted, several significant changes would occur in terms of climate, seasons, and habitability. The equator would receive constant, direct sunlight year-round, leading to perpetually hot temperatures. Conversely, the poles would always receive minimal sunlight, resulting in perpetual cold. This drastic temperature contrast would significantly affect global climates and weather patterns.

The absence of seasons would have profound impacts on ecosystems and agriculture. Regions near the equator might become too hot for many crops and organisms to thrive, while the polar regions would remain inhospitably cold. The middle latitudes would become the primary habitable zones, but even these areas would lack the seasonal variations that many plants and animals rely on for life cycles and reproduction.

Human societies would face serious challenges, including reduced agricultural productivity and increased pressure on habitable land. The lack of seasonal cues could also disrupt cultural and economic activities that depend on the changing seasons. Overall, a non-tilted Earth would lead to a less dynamic and less hospitable environment for life.

Fig. 2.2. Earth’s axial tilt. No tilt (left) and 90 degrees tilt (right)

If Earth's rotation axis were completely tilted to 90 degrees, it would have profound and dramatic effects on the planet's climate and environment. In this scenario, one hemisphere would experience continuous daylight for half the year while the other would be in constant darkness, and then the situation would reverse for the other half of the year.

Each hemisphere would undergo extreme seasonal variations. During its summer, one hemisphere would receive constant sunlight, leading to prolonged periods of intense heat and potentially desert-like conditions. Conversely, during its winter, the same hemisphere would experience continuous darkness and freezing temperatures.

The drastic changes in light and temperature would severely disrupt ecosystems. Many plants and animals are adapted to the current seasonal cycle, and such extreme changes would threaten their survival.

Agriculture, which relies on predictable seasons, would be significantly affected. Regions currently suitable for farming might become uninhabitable, leading to food shortages and the need for major adaptations in agricultural practices.

Overall, a completely tilted axis would make Earth much less hospitable for life, creating extreme and unstable environmental conditions.

c. The Right Rotation and Orbital Periods

The rotation period of the Earth is 24 hours, with about 12 hours of daylight and 12 hours of night on average. Our biological rhythms have been shaped by this rotation period. The 24-hour rotation period provides an optimal time block for 8 hours of work, 8 hours of sleep, and 8 hours of leisure. However, not all planets in the solar system have such a favorable rotation period. For example, the rotation period of Jupiter is about 10 hours, whereas that of Venus is 243 days.

If Earth's rotation period were shortened to 10 hours, it would significantly impact the planet's environment and life. A faster rotation would result in shorter days and nights, causing a rapid alternation between daylight and darkness. This could disrupt the circadian rhythms of many organisms, affecting sleep patterns, feeding behaviors, and reproduction cycles.

The increased rotational speed would also lead to stronger Coriolis effects, intensifying weather patterns and potentially causing more severe storms and hurricanes. The faster rotation could also impact the Earth's tectonic activity. The increased centrifugal force might lead to more frequent and intense earthquakes and volcanic eruptions.

On the other hand, if the Earth's rotation period were 243 days as in Venus, the consequences for the planet and its inhabitants would be drastic. Such a slow rotation would mean extremely long days and nights, each lasting about 120 days.

The side facing the Sun would experience prolonged heating, leading to scorching temperatures, while the side facing away would endure extended darkness and severe cooling, potentially freezing over. These temperature extremes would make it challenging for most forms of life to survive. The prolonged heating and cooling periods would disrupt atmospheric circulation, likely causing extreme weather patterns. Hurricanes, massive storms, and prolonged droughts or floods could become common.

The long periods of daylight and darkness would severely disrupt plant and animal life cycles, affecting photosynthesis, reproduction, and feeding patterns.

Human activities, agriculture, and infrastructure would need significant adaptation to cope with the harsh and varying conditions, posing a tremendous challenge to survival and daily living.

The orbital period of the Earth is also important for human survival. The orbital period of the Earth is 365 days with 3 months each for spring, summer, autumn, and winter. The length of each season is well-balanced, ensuring that no season is too short or too long. This balance is crucial for agricultural cycles, plant growth, the timing of animal migrations, and other ecological processes.

What would happen if the Earth had a short orbital period of 88 days, like Mercury? In this scenario, each season would last only about 3 weeks. Most crops on Earth require 6 to 9 months from sowing in spring to harvesting in fall. With seasons changing every 3 weeks, crops would not have enough time to mature, leading to serious food shortages and directly threatening human survival.

Conversely, what would happen if the Earth had a long orbital period of 164 years, like Neptune? Each season would last about 40 years. Prolonged summers would lead to extended heat waves and potential desertification, while extended winters would cause long periods of cold and ice, impacting agriculture and ecosystems. While humans might adapt to avoid food shortages, wild animals would struggle to find food during a 40-year-long winter. The prolonged harsh conditions would make it nearly impossible for most wildlife to survive, leading to widespread extinction.
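The season lengths in the two scenarios above follow from dividing the orbital period into four equal seasons. A minimal Python sketch, assuming equal seasons (a simplification that ignores orbital eccentricity) and a 365.25-day year for unit conversions; `season_length_days` is a hypothetical helper name.

```python
DAYS_PER_YEAR = 365.25  # Earth year in days, used only for unit conversions


def season_length_days(orbital_period_days: float, seasons: int = 4) -> float:
    """Length of one season if the orbit is divided into equal seasons (a simplification)."""
    return orbital_period_days / seasons


# Orbital periods used in the text.
CASES = {
    "Earth (365 days)": 365.0,
    "Mercury-like (88 days)": 88.0,
    "Neptune-like (164 years)": 164 * DAYS_PER_YEAR,
}

if __name__ == "__main__":
    for label, period in CASES.items():
        season = season_length_days(period)
        print(f"{label}: {season:,.1f} days per season = {season / 7:,.1f} weeks "
              f"= {season / DAYS_PER_YEAR:.2f} years")
```

An 88-day orbit gives 22-day seasons (just over 3 weeks), and a 164-year orbit gives 41-year seasons, matching the "about 3 weeks" and "about 40 years" in the text.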

d. The Right Size

You may not have thought about it, but the size of the Earth is crucial for the survival of human beings. The planet's size affects its gravitational pull, which in turn influences everything from the retention of a life-sustaining atmosphere to the ability to support stable bodies of water and maintain a protective magnetic field.

If Earth were half its current size (with the same average density), its surface gravity would be reduced to half its current value. This reduced gravity would have significant and potentially devastating impacts on the planet's ability to support life. It might not be strong enough to retain a dense atmosphere. A thinner atmosphere would offer less protection from harmful solar radiation and meteoroids and might not support the stable weather patterns necessary for life.

The reduced gravity would also affect the retention of liquid water, leading to increased evaporation rates and potentially a loss of surface water over time. This would make it difficult to sustain oceans, rivers, and lakes, which are crucial for supporting diverse ecosystems and human civilization.

Additionally, a smaller Earth would have a diminished magnetic field, offering less protection from the solar wind. This could strip away the atmosphere and further expose the surface to harmful cosmic and solar radiation, making the planet much less hospitable for human beings and other forms of life.

If the Earth were twice its current size, the effects on gravity and escape velocity would be significant and would have profound implications for life on the planet. Assuming the same average density, surface gravity would double, making everything on Earth feel twice as heavy, and the escape velocity would also double. This heightened gravity would make movement more strenuous for humans and other organisms, potentially leading to greater physical stress and adaptations over time.

The combination of increased gravity and escape velocity would also affect the atmosphere. A stronger gravitational pull would retain more gases, including toxic ones like methane and ammonia, similar to the atmospheres of Saturn and Jupiter. These gases could accumulate to harmful levels, creating a toxic environment unsuitable for most life forms.

Additionally, increased gravity could affect geological processes, leading to more intense volcanic activity and higher mountains. Overall, a larger Earth with increased gravity and escape velocity would present significant challenges for the survival of life, potentially resulting in a more hostile and unstable environment.
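The statements that halving Earth's size halves its gravity, and that doubling it doubles the escape velocity, hold when the planet keeps the same average density: then the mass grows as R³, so surface gravity g = GM/R² and escape velocity √(2GM/R) both grow in proportion to R. The sketch below is a minimal Python illustration under that equal-density idealization, assuming Earth's mean radius (6,371 km), mean density (about 5,514 kg/m³), and the CODATA 2018 value of G; the function names are hypothetical.

```python
import math

G = 6.67430e-11           # gravitational constant, m^3 kg^-1 s^-2 (CODATA 2018)
EARTH_RADIUS = 6.371e6    # Earth's mean radius, m
EARTH_DENSITY = 5514.0    # Earth's mean density, kg/m^3


def planet_mass(radius: float, density: float = EARTH_DENSITY) -> float:
    """Mass of a sphere of uniform density: M = (4/3) * pi * R^3 * rho."""
    return 4.0 / 3.0 * math.pi * radius**3 * density


def surface_gravity(radius: float, density: float = EARTH_DENSITY) -> float:
    """g = G * M / R^2; at fixed density this grows linearly with R."""
    return G * planet_mass(radius, density) / radius**2


def escape_velocity(radius: float, density: float = EARTH_DENSITY) -> float:
    """v_esc = sqrt(2 * G * M / R); at fixed density this also grows linearly with R."""
    return math.sqrt(2 * G * planet_mass(radius, density) / radius)


if __name__ == "__main__":
    g0 = surface_gravity(EARTH_RADIUS)
    v0 = escape_velocity(EARTH_RADIUS)
    for scale in (0.5, 1.0, 2.0):
        r = scale * EARTH_RADIUS
        g, v = surface_gravity(r), escape_velocity(r)
        print(f"R = {scale} x Earth: g = {g:.2f} m/s^2 ({g / g0:.2f} x), "
              f"v_esc = {v / 1000:.1f} km/s ({v / v0:.2f} x)")
```

For the present Earth this gives about 9.8 m/s² and 11.2 km/s; a half-size planet of the same density has half of each, and a double-size one has twice each. A planet with a different composition, and hence a different density, would not follow this simple scaling.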

Fig. 2.3. Comparison of the sizes of the planets in the solar system

e. The Existence of Magnetosphere

Earth is surrounded by a system of magnetic fields known as the magnetosphere, which shields the planet from harmful solar and cosmic radiation. This protective shield is crucial for maintaining life on Earth. To have a magnetosphere, two factors are essential: the proper rotation speed and the existence of a metallic liquid outer core. Fortunately, Earth possesses both. The planet’s rotation induces fluid motions (convection) within the liquid outer core, generating strong magnetic fields that form the magnetosphere.

What would happen if we didn’t have a magnetosphere? If Earth didn’t have a magnetosphere, the consequences for living organisms and the atmosphere would be severe. Without this protective shield, harmful solar and cosmic radiation would bombard the planet, significantly increasing the risk of cancer and genetic mutations in living organisms. Additionally, the magnetosphere helps prevent atmospheric loss by deflecting charged particles from the solar wind. Without it, these particles would strip away the atmosphere over time through sputtering, depleting essential gases like oxygen and nitrogen. This atmospheric erosion would lead to a thinner atmosphere, reduced surface pressure, and extreme temperature variations, making Earth less hospitable for life.

The strength of the magnetic field on Mars is about 0.01% of that of the Earth. Because its magnetic field is so weak, Mars could not form a global magnetosphere, and as a result most of its atmosphere was removed by sputtering.

Fig. 2.4. Earth’s magnetosphere deflects harmful cosmic rays

Near the magnetic poles in the Arctic and Antarctic, the field lines of the magnetosphere converge and dip steeply toward the surface. Charged particles can travel along these field lines into the upper atmosphere, so these regions receive greater exposure to solar radiation. The high-energy charged particles ionize and excite atoms in the upper atmosphere, producing the colorful aurora borealis (northern lights) and aurora australis (southern lights).

f. The Existence of an Exceptionally Large Moon

Compared with the moons of the other planets, Earth's Moon is exceptionally large relative to its host planet. This large Moon plays an important role in supporting life on Earth. Although its influence is not immediately apparent, it contributes in two fundamental ways. First, the Moon stabilizes Earth's rotational axis, limiting large variations in axial tilt. Second, it generates tidal forces that are essential for many marine ecosystems.

This section first examines the stabilizing effect of the Moon on Earth’s rotational axis. Earth’s axis is tilted by approximately 23.5 degrees, a configuration that produces regular seasonal variations and supports stable climatic conditions. The long-term stability of this axial tilt is largely due to the gravitational influence of the Moon, which orbits Earth at a relatively close distance.

Among the terrestrial planets of the solar system, Earth is unique in possessing a large moon. The Moon has a radius of approximately 1,740 km. By contrast, Mercury and Venus have no natural satellites, and Mars has only two small moons, Phobos and Deimos, named after figures in Greek mythology. Their radii are only about 11 km and 6 km, respectively; even Phobos, the larger of the two, is roughly 0.6% of the Moon's radius.
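In absolute size, several moons of Jupiter and Saturn are larger than our Moon; what sets the Moon apart is its size relative to its planet. The short calculation below makes that comparison explicit. The radii are rounded published mean values, and the helper name `size_ratios` is hypothetical, chosen for this example.

```python
# Mean radii in km (rounded published values).
PLANET_RADIUS_KM = {"Earth": 6371.0, "Mars": 3389.5}
MOONS = [
    ("Moon", "Earth", 1737.4),
    ("Phobos", "Mars", 11.1),
    ("Deimos", "Mars", 6.2),
]

def size_ratios(moons, planets):
    """For each moon, return (name, radius / host-planet radius,
    radius as a percentage of the Moon's radius)."""
    moon_radius = next(r for name, _, r in moons if name == "Moon")
    return [(name, radius / planets[host], 100 * radius / moon_radius)
            for name, host, radius in moons]

for name, to_planet, pct_of_moon in size_ratios(MOONS, PLANET_RADIUS_KM):
    print(f"{name:7s} radius/planet = {to_planet:.4f}   % of Moon = {pct_of_moon:.2f}")
```

The Moon's radius is a little over a quarter of Earth's, while Phobos's radius is well under one percent of Mars's.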

As discussed earlier, the habitable zone of the solar system lies near 1 astronomical unit (AU) from the Sun. By comparison, the planets, which orbit close to the ecliptic plane, extend outward to approximately 30 AU, the orbit of Neptune. In this context, Earth orbits relatively close to the Sun. While this proximity is necessary to maintain surface temperatures suitable for life, it also means that the Sun exerts a strong gravitational influence on Earth's rotational dynamics.
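Both tidal forces and the torque that a body exerts on Earth's equatorial bulge scale as M/d³, the body's mass divided by the cube of its distance. The sketch below uses that proportionality to compare the Sun and the Moon. It is only a rough proxy: it does not model the resonances and chaotic dynamics that actually govern axial tilt, and the function name is chosen for this example.

```python
# Rounded textbook values: masses in kg, mean distances from Earth in m.
M_SUN = 1.989e30
M_MOON = 7.342e22
D_SUN = 1.496e11    # 1 AU
D_MOON = 3.844e8    # mean Earth-Moon distance

def tidal_strength(mass_kg, distance_m):
    """Relative strength of a body's differential (tidal) pull on Earth.

    Tidal force and bulge torque both scale as M / d**3, so only ratios
    of this quantity are meaningful.
    """
    return mass_kg / distance_m**3

moon = tidal_strength(M_MOON, D_MOON)
sun = tidal_strength(M_SUN, D_SUN)
print(f"Moon/Sun tidal ratio at Earth: {moon / sun:.2f}")

# Because of the 1/d**3 dependence, halving a body's distance
# multiplies its tidal influence by about 8.
print(tidal_strength(M_SUN, D_SUN / 2) / sun)
```

Despite its tiny mass, the nearby Moon exerts roughly 2.2 times the Sun's tidal influence on Earth, and the steep 1/d³ dependence shows why orbital distance matters so much for both bodies.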

The destabilizing effect of solar gravity on a planet's rotational axis can be observed in neighboring planets. Mars, which lacks a large stabilizing moon, provides a well-studied example. As illustrated in the figure below, Mars experiences substantial variations in both its axial tilt and its orbital eccentricity due to gravitational perturbations from the Sun and the other planets. These variations occur with a characteristic period of approximately 150,000 years. Over the past six million years, Mars's axial tilt has varied between about 15 and 45 degrees, while its orbital eccentricity has ranged from 0.01 to 0.11.

Fig. 2.5. Rotation axis and eccentricity changes in Mars

On Venus, a terrestrial planet similar in size to Earth but lacking a moon, the Sun’s gravitational influence produces large and chaotic variations in the planet’s rotational axis, ranging from approximately 0 to 180 degrees. This raises the question of how Earth’s rotational axis might behave in the absence of its Moon. Although estimates vary among studies, most indicate that over geological timescales Earth’s axial tilt would fluctuate within a range of roughly 0 to 70 degrees. Variations of this magnitude would result in extreme climatic instability, leading to severe and persistent environmental stresses that would greatly challenge the long-term survival of complex life. Fortunately, however, the Creator prepared an exceptionally large moon near Earth, preventing such disasters.

How did Earth, unlike the other terrestrial planets, come to possess such a massive moon? Several hypotheses have been proposed to explain the Moon's origin. These include the giant-impact hypothesis, which proposes that a Mars-sized body collided with Earth; the capture hypothesis, which suggests that the Moon was gravitationally captured from elsewhere in the solar system; the co-formation hypothesis, which posits that Earth and the Moon formed together from the same circumplanetary material; and the fission hypothesis, which suggests that the Moon formed from material that separated from Earth. Among these, recent studies strongly favor the giant-impact hypothesis as the most plausible explanation.

However, for a giant impact to produce a satellite system like the Earth–Moon system, a series of highly specific conditions must be satisfied. First, the mass of the impactor and the geometry of the collision must fall within a narrow range. The impacting body, commonly referred to as Theia, is estimated to have had about 10% of Earth's mass, roughly the mass of Mars. This mass is sufficient to eject a large amount of mantle material into orbit without disrupting Earth's structural integrity. In addition, the collision must have occurred at an oblique angle; a head-on impact would have imparted little angular momentum to the ejected material and so would not have generated the debris disk necessary for lunar formation.
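Why the impact angle matters can be seen from the angular momentum an impactor delivers, L = m·v·b, where the impact parameter b = (R_Earth + R_impactor)·sin θ and θ is measured from the vertical (θ = 0 is head-on). The sketch below treats Theia as a point mass of 10% of Earth's mass, as in the text; its radius and the 9.3 km/s impact speed are hypothetical values assumed for illustration, not figures from this book.

```python
import math

M_EARTH = 5.972e24          # kg
R_EARTH = 6.371e6           # m
M_IMPACTOR = 0.1 * M_EARTH  # ~10% of Earth's mass, as stated in the text
R_IMPACTOR = 3.39e6         # m, assumed Mars-sized radius (hypothetical)
V_IMPACT = 9.3e3            # m/s, assumed impact speed (hypothetical)

def impact_angular_momentum(angle_deg):
    """Angular momentum (kg m^2/s) delivered by a point-mass impactor.

    angle_deg is measured from the vertical: 0 = head-on, 90 = grazing.
    """
    impact_parameter = (R_EARTH + R_IMPACTOR) * math.sin(math.radians(angle_deg))
    return M_IMPACTOR * V_IMPACT * impact_parameter

for angle in (0, 30, 45, 60):
    print(f"{angle:2d} deg: {impact_angular_momentum(angle):.2e} kg m^2/s")
```

A head-on collision delivers no angular momentum at all, whereas an impact near 45 degrees delivers a few times 10^34 kg m²/s, the same order as the present Earth–Moon system (roughly 3.5 × 10^34 kg m²/s). This simple estimate ignores the impactor's spin and the details of the debris dynamics.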

Second, the ejected material must have condensed and coalesced rapidly. The Moon must have formed within a limited accretion timescale, before the debris was either dispersed by Earth’s strong tidal forces or reaccreted onto the planet.

Finally, Earth's rotation immediately after the impact must have been fast enough for the debris to settle into stable orbits beyond the Roche limit, the distance inside which Earth's tidal forces would tear apart any body held together only by its own gravity. Under these conditions, the material could coalesce into a single large satellite rather than falling back to Earth. Because the parameters governing mass, impact angle, velocity, and timing must all align within narrow constraints, the formation of a massive satellite like the Moon around a terrestrial planet is considered a rare outcome in planetary systems.
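The Roche limit mentioned above can be estimated from the classical approximation d ≈ 2.44 · R_planet · (ρ_planet / ρ_satellite)^(1/3) for a fluid satellite, or with a coefficient of about 1.26 for a perfectly rigid one. The sketch below applies it to Earth and the Moon using rounded mean densities; the function name and default argument are choices made for this example.

```python
R_EARTH_KM = 6371.0
RHO_EARTH = 5514.0   # mean density of Earth, kg/m^3
RHO_MOON = 3344.0    # mean density of the Moon, kg/m^3

def roche_limit_km(r_planet_km, rho_planet, rho_satellite, coefficient=2.44):
    """Distance inside which tidal forces overcome a satellite's self-gravity.

    2.44 is the classical fluid-body coefficient; about 1.26 applies
    to a perfectly rigid body.
    """
    return coefficient * r_planet_km * (rho_planet / rho_satellite) ** (1 / 3)

fluid = roche_limit_km(R_EARTH_KM, RHO_EARTH, RHO_MOON)
rigid = roche_limit_km(R_EARTH_KM, RHO_EARTH, RHO_MOON, coefficient=1.26)
print(f"fluid: {fluid:,.0f} km ({fluid / R_EARTH_KM:.1f} Earth radii)")
print(f"rigid: {rigid:,.0f} km; present Moon distance: ~384,400 km")
```

For a fluid body the limit comes to roughly 18,000 km, or about 2.9 Earth radii, so debris orbiting inside that distance could not gather into a moon, while the Moon today orbits far outside it.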